ChatGPT-5: The Complete Guide — How OpenAI’s Latest Model Works, What It Can Do, and Why It Matters

This comprehensive, easy-to-understand guide explains GPT-5, OpenAI's latest model, in clear, practical language. It covers how GPT-5 works, what it can do, real-world applications across industries, ethical considerations, limitations, and the potential future of the technology.

Table of contents

- Introduction: The Rise of AI and GPT-5’s Arrival

- What Is GPT-5?

- The Evolution of OpenAI’s GPT Models

- How GPT-5 Works (Simplified Technical Explanation)

- Core Capabilities of GPT-5

- How GPT-5 Communicates Like a Human

- Practical Applications Across Industries

- Comparing GPT-5 with Previous Generations

- Ethical Considerations and Limitations

- Deploying GPT-5: Best Practices and Implementation

- The Future of GPT Technology

- Conclusion

1. Introduction: The Rise of Artificial Intelligence and GPT-5’s Arrival

Artificial intelligence has moved from niche research labs into everyday tools that affect how people write, work, create, and learn. Among the many breakthroughs in recent years, the GPT series from OpenAI stands out as a defining line of progress in natural language processing.

GPT-5 represents a major step in that lineage. It combines advanced language understanding with broader context handling, improved reasoning, and refined safety controls. This article provides an approachable, deeply informative look at GPT-5 for readers who want both clarity and substance.

2. What Is GPT-5?

GPT-5 is a large language model created by OpenAI. At its core, it is a computer program trained to predict and generate human-like text.

Unlike simple chatbots, GPT-5 can handle complex instructions, write code, explain ideas, summarize long documents, translate languages, and even work with images and structured data in some configurations.

Architecturally, GPT-5 follows the transformer lineage that underpins modern language models. What makes GPT-5 notable is its combination of broad knowledge, improved reasoning, and (in some product versions) tools such as web access, memory, and controlled code execution environments, which extend what it can do for users while keeping interactions safe.

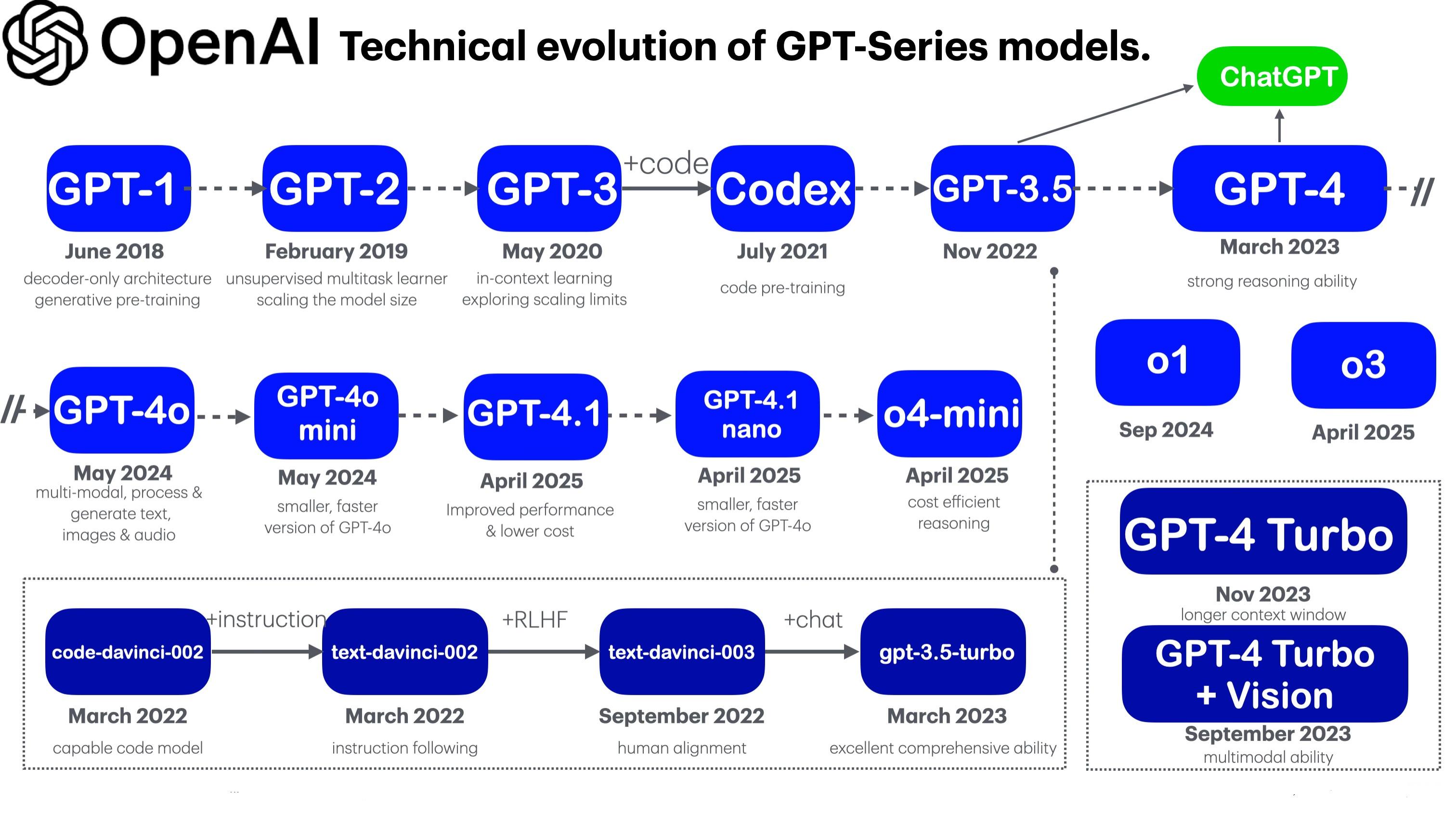

3. The Evolution of OpenAI’s GPT Models

To understand GPT-5, it helps to see the arc of progress that led to it.

From GPT-1 to GPT-4: a quick timeline

GPT-1 introduced the idea that a single, large transformer trained on a broad corpus could learn a wide range of language tasks with simple fine-tuning.

GPT-2 scaled that idea up, demonstrating that larger models trained on more data could generate coherent paragraphs of text. That release sparked wide public interest and debate over risks and benefits.

GPT-3 and GPT-3.5 greatly expanded scale and capability, supporting zero-shot and few-shot learning. GPT-3 made it possible for developers to use models as versatile language APIs.

GPT-4 improved reasoning, context retention, and reliability, and introduced more robust safety mitigations and multimodal abilities (handling images alongside text in certain variants).

Each step improved context window size (how much input the model can consider at once), the model’s ability to follow nuanced instructions, and safety behaviors. GPT-5 builds on these advances, with a stronger emphasis on controlled reasoning, more natural conversation, and integration into practical workflows.

4. How GPT-5 Works (Simplified Technical Explanation)

This section avoids dense math but gives enough technical detail for readers who want a clear sense of how the model operates.

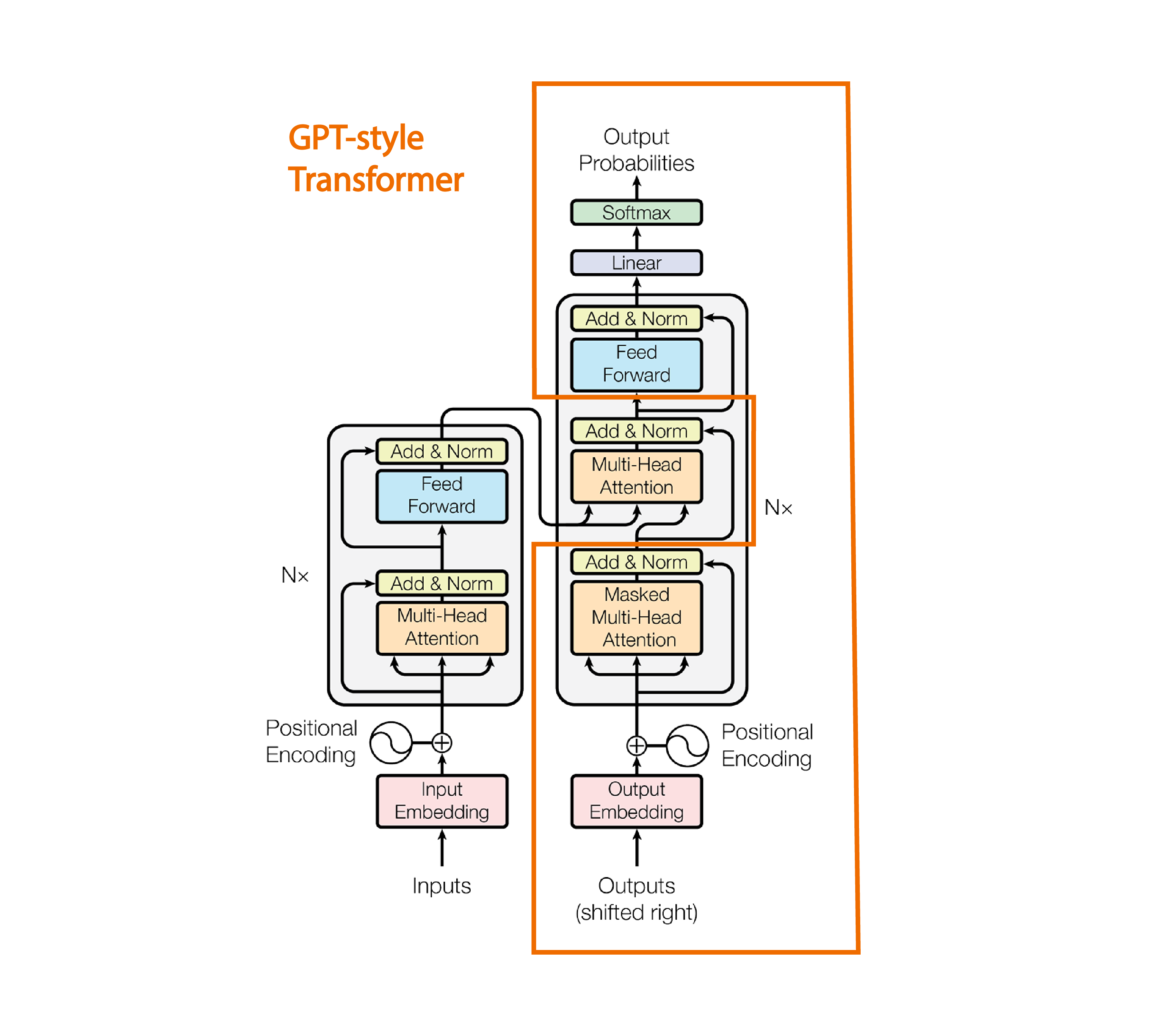

Transformers and language modeling

GPT-5 is built on the transformer concept, a neural network design that uses attention mechanisms to weigh the importance of different parts of the input when producing output. Instead of passing information along one step at a time, as older recurrent networks did, a transformer computes relationships between tokens (pieces of text) directly and decides what to focus on. In GPT-style models, which generate text left to right, each token can attend to itself and to every token before it.
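To make the attention idea concrete, here is a minimal single-head sketch in plain Python. It illustrates the general technique (scaled dot-product attention with a causal mask), not GPT-5's actual implementation, which OpenAI has not published. For simplicity the same vectors serve as queries, keys, and values; real models derive them through learned projections and run many attention heads in parallel.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of vectors, one per token. Token i may only
    attend to tokens 0..i, mirroring left-to-right generation.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Similarity scores against the current and earlier tokens only.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy tokens with 2-dimensional vectors (values chosen arbitrarily).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = causal_self_attention(x, x, x)
```

Because the first token can only see itself, its output equals its own value vector; later tokens blend information from everything that came before them.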

Training: learning from large-scale text

Training a model like GPT-5 involves showing it a massive amount of text and asking it to predict the next token (a word or a piece of a word). Over many passes, the model adjusts its internal parameters to make better predictions. OpenAI has not disclosed the full training set, but data for models of this kind is typically diverse and curated to cover many domains: books, articles, websites, code repositories, and other public text.
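The next-token objective can be illustrated with a deliberately tiny stand-in: a bigram model that simply counts which token follows which. GPT-5 learns with a large neural network and gradient descent rather than by counting, but the quantity being improved is similar in spirit: the average negative log-likelihood (cross-entropy) of the actual next token, which falls as predictions get better. The corpus and function names are illustrative.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the follow-up counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}  # token never seen with a follower
    return {tok: c / total for tok, c in counts[prev].items()}

def average_nll(counts, tokens):
    """Average negative log-likelihood of each actual next token (lower is better)."""
    losses = []
    for prev, nxt in zip(tokens, tokens[1:]):
        p = next_token_probs(counts, prev).get(nxt, 1e-9)  # tiny floor for unseen pairs
        losses.append(-math.log(p))
    return sum(losses) / len(losses)

corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
probs = next_token_probs(model, "the")  # 'cat' about 0.67, 'mat' about 0.33
```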

Crucially, modern training also includes techniques to shape behavior: supervised fine-tuning (training on examples that show the desired output), reinforcement learning from human feedback (RLHF), and safety filters. In RLHF, people rank different model replies to the same prompt; those rankings train a reward model, and the language model is then optimized to produce replies the reward model scores highly, which teaches it which styles of reply are more helpful or safer.
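OpenAI has not published GPT-5's exact training recipe, but a widely described RLHF setup (used in OpenAI's earlier InstructGPT work) first trains a reward model on human comparisons with a pairwise loss like the one sketched below, then optimizes the language model against that reward model. The function name and example scores are hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise (Bradley-Terry style) loss for training a reward model.

    Small when the reward model scores the human-preferred reply higher
    than the rejected one; large when it gets the order wrong.
    """
    margin = reward_chosen - reward_rejected
    # Equivalent to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))

good_order = preference_loss(2.0, -1.0)  # preferred reply scored higher: low loss
bad_order = preference_loss(-1.0, 2.0)   # preferred reply scored lower: high loss
```

Minimizing this loss over many ranked pairs pushes the reward model to agree with human judgments, which is what makes it usable as a training signal afterwards.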

Reasoning, context, and memory

GPT-5 improves on earlier models in handling longer context, meaning it can consider larger chunks of a conversation or document when answering. That leads to better coherence and fewer mistakes caused by losing track of earlier details. Additionally, some products built on GPT-5 offer a "memory" feature that lets the assistant remember user preferences or project specifics across sessions, improving personalization.
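Even with a larger context window, applications still have to keep inputs within the model's limit. One common approach is to keep the most recent conversation turns that fit a token budget and drop the oldest. The sketch below assumes a rough four-characters-per-token estimate; a real application would count tokens with the model's own tokenizer (for OpenAI models, the `tiktoken` library), and the helper names are hypothetical.

```python
def estimate_tokens(text):
    """Very rough token estimate (assumption: about 4 characters per token)."""
    return max(1, len(text) // 4)

def fit_to_context(messages, budget):
    """Keep the most recent messages whose combined estimate fits in `budget` tokens.

    `messages` is a list of strings, oldest first. The result preserves order.
    """
    kept = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break  # older messages no longer fit
        kept.append(msg)
        used += cost
    return list(reversed(kept))

history = ["a" * 40, "b" * 40, "c" * 40]  # each estimated at 10 tokens
print(fit_to_context(history, 25))  # keeps the last two messages
```

Dropping the oldest turns is the simplest policy; applications that need older details often summarize them instead of discarding them.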

Multimodality and tools

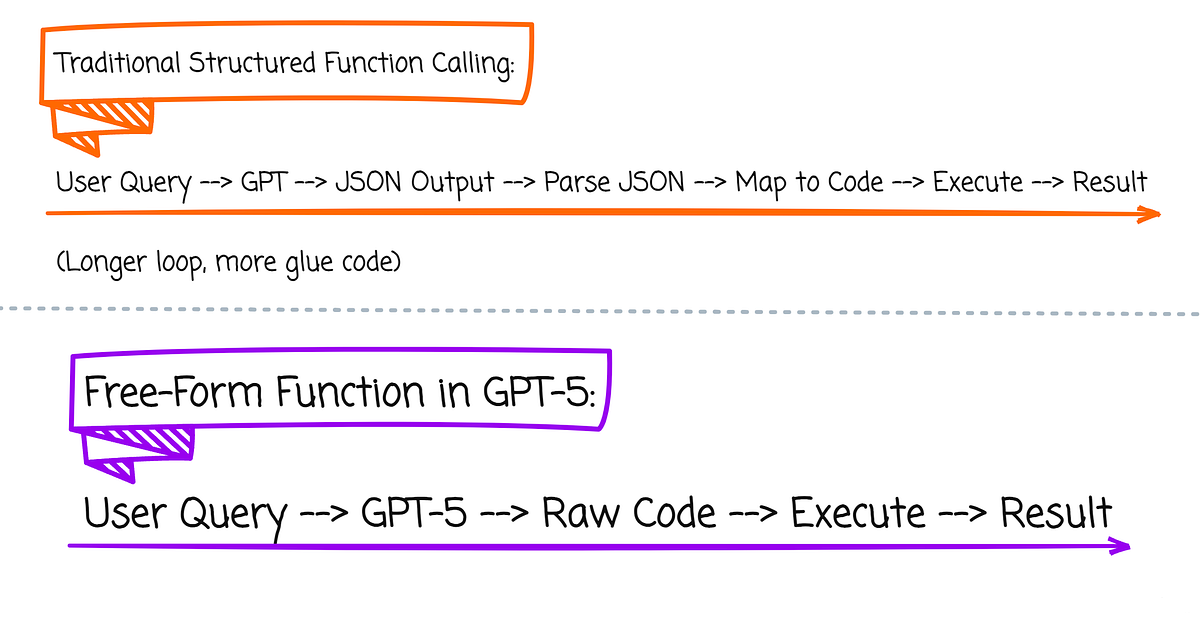

Some GPT-5 deployments are multimodal, able to accept images or other structured inputs in addition to text. Other deployments connect to tools: web search, calculators, code execution sandboxes, and file viewers. These tool integrations help ground the model’s outputs in more accurate, up-to-date information and can reduce hallucinations (confident but incorrect answers).
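In tool-using setups the model does not run tools itself: it emits a structured request, the surrounding application executes it, and the result is passed back to the model as new context. The sketch below shows only the application-side dispatch step. The JSON shape, the tool names, and the `run_tool_call` helper are illustrative assumptions, not a specific vendor API.

```python
import json

# Hypothetical tool registry: names map to ordinary Python functions.
def add(a, b):
    return a + b

def word_count(text):
    return len(text.split())

TOOLS = {"add": add, "word_count": word_count}

def run_tool_call(raw_call):
    """Execute a tool call the model emitted as JSON, e.g.
    {"name": "add", "arguments": {"a": 2, "b": 3}}.

    Unknown tools return an error message instead of raising, so the
    application can report the problem back to the model.
    """
    call = json.loads(raw_call)
    func = TOOLS.get(call.get("name"))
    if func is None:
        return {"error": f"unknown tool: {call.get('name')}"}
    return {"result": func(**call.get("arguments", {}))}

print(run_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # {'result': 5}
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed.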

5. Core Capabilities of GPT-5

GPT-5 is a general-purpose AI assistant with strong capabilities in multiple domains. Below are the major functional areas where GPT-5 excels.

Natural language generation

GPT-5 can produce high-quality text: articles, blog posts, emails, creative stories, and more. It follows tone, style, and structural cues and can target a given length, reading level, or set of SEO keywords without obvious keyword stuffing.

Summarization and comprehension

The model can read long documents and summarize them concisely or extract the most important points. That makes it useful for generating executive summaries, study notes, and content briefs.

Code writing and debugging

GPT-5 is proficient at writing code across popular languages, suggesting fixes, explaining code snippets, and proposing architecture ideas. It can convert pseudocode to working code and explain algorithmic trade-offs in accessible language.

Research and fact synthesis

When combined with web access tools, GPT-5 can gather and synthesize information from multiple sources, producing citations, comparisons, and up-to-date summaries. Without web access, GPT-5 draws only on its training data, so its knowledge ends at its training cutoff and may be out of date on recent events.

Translation and localization

GPT-5 supports accurate translation across many languages and can adapt text to local cultural nuances for more natural localization.

Conversational assistants and workflows

GPT-5 can be embedded into apps and services as a conversational layer that automates tasks, answers customer questions, drafts responses, or triages support requests.

Creative tasks

From brainstorming names and marketing copy to generating story outlines or poetry, GPT-5 helps unlock creativity while providing structure and iteration speed.

6. How GPT-5 Communicates Like a Human

One of GPT-5’s distinguishing qualities is its ability to produce natural, human-like conversation while maintaining helpfulness and clarity. Here are the design ideas that support that behavior.

Instruction-following and clarity

GPT-5 is trained to follow explicit instructions. That means users can ask the model to "write a 300-word summary" or "explain this concept with a simple analogy" and expect consistent results. Clear prompts lead to useful answers.

Tone-matching and style adaptation

The model adapts to the required tone: formal, casual, technical, or friendly. It can imitate a writing style without copying source text directly, producing content that remains original and contextually appropriate.

Conversation memory and personalization

When enabled, memory features allow GPT-5 to recall user preferences, previously provided facts, or repeated tasks. This makes follow-up interactions smoother and reduces the need for repetition from the user’s side while respecting privacy controls set by the platform.

Reducing hallucinations through tools and grounding

Despite improvements, language models can still hallucinate — invent facts or provide incorrect specifics. GPT-5 mitigates this by integrating tools (web search, calculators, file readers) where available and by expressing uncertainty when a confident answer isn't warranted, encouraging verification rather than blind reliance.

7. Practical Applications Across Industries

GPT-5’s versatility makes it useful across nearly every modern industry. Below are practical examples and short case sketches showing how organizations might use the model.

Education

Teachers and students can use GPT-5 to generate lesson plans, create practice questions, explain complex topics in simpler terms, and provide study summaries. With proper oversight, it can accelerate content creation and personalized learning pathways.

Healthcare (informational)

GPT-5 can help draft patient-facing educational material, summarize medical literature for clinicians, and assist administrative staff with letters and documentation. It is important to note that GPT-5 is not a substitute for clinical judgment; medical decisions must be made by qualified professionals.

Business and marketing

From ad copy to market research summaries, GPT-5 accelerates content operations. It can automate routine communications, generate ideas for campaigns, produce product descriptions, and draft message variants for A/B testing.

Software development and operations

Developers use GPT-5 to prototype code, write unit tests, generate configuration templates, and document APIs. Operations teams use it to produce runbooks, incident summaries, and technical documentation faster.

Legal and compliance

GPT-5 can draft plain-language explanations of legal documents, summarize changes in legislation, or create checklists for compliance processes. Legal professionals must review outputs and ensure jurisdiction-specific accuracy.

Creative industries

Writers, filmmakers, and game designers use GPT-5 for outlines, character development, dialogue generation, and iterative brainstorming. Editors can use the model to speed up research and first-pass fact-checking during development, then confirm its findings against reliable sources.

Finance

Analysts use GPT-5 to summarize reports, generate scenario narratives, and draft earnings-note summaries. When combined with quantitative tools, it can be part of a hybrid human+AI research pipeline.

8. Comparing GPT-5 with Previous Generations

This section highlights practical differences between GPT-5 and earlier models so readers can understand the real-world impact of upgrades.

Context and coherence

GPT-5 handles longer conversations and documents with higher coherence. That reduces the need to repeatedly remind the model of earlier context.

Instruction-following and reliability

Through improved fine-tuning and human feedback loops, GPT-5 tends to follow instructions more precisely and produce useful outputs with fewer edits.

Multimodality and tools

GPT-5 expands multimodal capabilities and tool integrations, moving beyond text-only interactions and enabling practical tasks like image understanding, code execution, and safe web lookups (when configured).

Safety and mitigations

OpenAI incorporated more refined safety checks and alignment efforts in GPT-5, aiming to reduce harmful or biased outputs compared to earlier releases. Nonetheless, no model is perfect, and responsible use is essential.

Practical takeaway: GPT-5 is not just "more of the same" — it offers measurable improvements in reliability, context depth, and practical tool-driven utility.

9. Ethical Considerations and Limitations

As with any powerful technology, GPT-5 raises important ethical and practical questions. This section outlines the main concerns and how they are typically addressed.

Bias and fairness

Language models learn patterns from the data they are trained on. If the training data contains biased or unrepresentative perspectives, the model can reproduce or amplify those biases. Mitigation requires careful dataset curation, testing across demographics, human review, and post-processing rules.

Misinformation and hallucinations

GPT-5 can sometimes produce incorrect or misleading statements confidently. Responsible deployments use grounding tools (search, citation systems), require human verification for critical tasks, and instruct the model to provide sources or indicate uncertainty.

Privacy concerns

Models trained on broad datasets must be designed and released in ways that minimize exposure of private or identifiable information. Platform-level controls and careful policy enforcement are crucial.

Job impacts and augmentation

GPT-5 will change how certain tasks are performed — automating routine writing, research, or synthesis tasks. While some jobs may be disrupted, many roles will be augmented: humans working alongside AI to increase productivity and focus on higher-value work.

Security and misuse

There is a risk that bad actors could misuse powerful language models to craft persuasive disinformation, phishing, or social-engineering attacks. Mitigation includes usage policy enforcement, abuse detection, and limits on automated high-volume generation in sensitive contexts.
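One common way to enforce limits on high-volume generation is a per-user or per-API-key token bucket: each request spends a token, and tokens refill at a fixed rate. This is a minimal sketch with hypothetical names and parameters; production systems usually enforce such limits in shared infrastructure rather than inside a single process.

```python
import time

class TokenBucket:
    """Simple token-bucket rate limiter.

    Allows bursts of up to `capacity` requests and refills at `rate`
    requests per second. The clock is injectable so the logic is testable.
    """

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True and consume one token if a request may proceed now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

fake_now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(3)])  # [True, True, False]
fake_now[0] = 1.0
print(bucket.allow())  # True: one token refilled after one second
```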

What GPT-5 cannot do

- GPT-5 cannot access private systems or personal accounts unless explicitly connected with secure, consented integrations.

- It cannot replace expert judgment in high-stakes domains like medicine, law, or safety-critical engineering.

- It does not possess beliefs, consciousness, or intentions; it generates statistically likely continuations of text and has no experiences of its own.

10. Deploying GPT-5: Best Practices and Implementation

Organizations that want to adopt GPT-5 should follow pragmatic best practices to get value while managing risk.

Define clear use cases

Start with limited, high-value tasks where the model can augment human work: drafting, summarization, idea generation, or internal automation. Avoid starting with open-ended, critical, high-stakes tasks.

Human-in-the-loop (HITL)

For sensitive or consequential outputs, keep a human reviewer in the workflow. Use the model to accelerate human tasks, not replace essential decisions.
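A human-in-the-loop policy often comes down to a routing rule that decides which drafts go straight out and which wait for a reviewer. The sketch below is one hypothetical rule: the topic labels, the `confidence` score (which the application would have to compute, for example with a separate classifier), and the threshold are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Draft:
    text: str
    topic: str
    confidence: float  # hypothetical score in [0, 1] computed by the application

# Illustrative policy values; real thresholds would come from testing.
SENSITIVE_TOPICS = {"medical", "legal", "financial"}
CONFIDENCE_THRESHOLD = 0.8

def route(draft):
    """Decide whether a model draft can be sent directly or needs human review."""
    if draft.topic in SENSITIVE_TOPICS:
        return "human_review"  # consequential domains always get a reviewer
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # low-confidence drafts are escalated
    return "auto_send"

print(route(Draft("Your order shipped today.", "shipping", 0.95)))  # auto_send
print(route(Draft("Here is a summary of your lab results.", "medical", 0.99)))  # human_review
```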

Data governance and privacy

Maintain clear policies about what user data is sent to the model, store minimal logs, and apply access controls. When using personalization or memory features, ensure explicit user consent and clear delete/forget flows.
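Storing minimal logs often means redacting obvious personal data before anything is written. The sketch below uses two deliberately simple regular expressions; they are illustrative assumptions that will miss many formats and over-match others (such as dates or long digit strings), so real deployments typically rely on dedicated PII-detection tooling.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers before text is logged."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
# Contact [EMAIL] or [PHONE].
```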

Testing and evaluation

Before large-scale deployment, evaluate the model on representative inputs, measure hallucination rates, bias, and correctness, and create fallback plans for critical failures.
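A small evaluation harness makes this testing repeatable: run the model over representative prompts with known answers and keep the failures for review. In the sketch below, `model_fn` is a hypothetical stand-in for whatever calls the model, and exact matching is a deliberately strict placeholder for task-specific scoring.

```python
def evaluate(model_fn, cases):
    """Run a model function over labeled test cases and report simple metrics.

    `model_fn` is a hypothetical callable (prompt -> answer string);
    `cases` is a list of (prompt, expected_answer) pairs. Matching is exact
    after trimming whitespace and lowercasing.
    """
    failures = []
    for prompt, expected in cases:
        answer = model_fn(prompt)
        if answer.strip().lower() != expected.strip().lower():
            failures.append((prompt, expected, answer))
    accuracy = 1 - len(failures) / len(cases)
    return accuracy, failures

# A stand-in "model" so the harness can be exercised without any API.
def fake_model(prompt):
    return {"capital of France?": "Paris", "2 + 2?": "5"}.get(prompt, "")

acc, failed = evaluate(fake_model, [("capital of France?", "paris"), ("2 + 2?", "4")])
print(acc)  # 0.5
```

Reviewing the recorded failures, not just the accuracy number, is what reveals patterns such as hallucinated specifics or uneven performance across user groups.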

Monitoring and feedback

Deploy runtime monitoring to detect unexpected behaviors, and set up mechanisms for users to report errors. Continually refine prompts and any fine-tuned models based on real-world feedback.
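Runtime monitoring can start with something as simple as tracking the share of flagged responses over a sliding window and alerting when it crosses a threshold. What counts as flagged (a user report, a failed validation check, a moderation hit), the window size, and the threshold are application choices; the class name and values below are illustrative.

```python
from collections import deque

class ErrorRateMonitor:
    """Track the share of flagged responses over the last `window` requests."""

    def __init__(self, window=100, threshold=0.05):
        self.events = deque(maxlen=window)  # oldest events drop off automatically
        self.threshold = threshold

    def record(self, flagged):
        """Record one response; return True if the recent error rate exceeds the threshold."""
        self.events.append(bool(flagged))
        rate = sum(self.events) / len(self.events)
        return rate > self.threshold

monitor = ErrorRateMonitor(window=4, threshold=0.25)
alerts = [monitor.record(f) for f in [False, False, False, True, True]]
print(alerts)  # [False, False, False, False, True]
```

The fourth response brings the rate to exactly 0.25, which does not exceed the threshold; the fifth pushes the oldest clean response out of the window and raises the rate to 0.5, triggering the alert.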

11. The Future of GPT Technology

GPT-5 is another milestone in a long trajectory toward more capable AI assistants. Looking forward, a few themes are likely to shape the next stages.

Tighter human–AI collaboration

AI will increasingly be designed for collaborative workflows: drafting, critiquing, iterating with humans in the loop. This augmentation model emphasizes complementarity, not replacement.

Smarter tool chains

Future systems will connect models to richer tool chains — specialty calculators, domain-specific knowledge bases, verified databases, and safe code sandboxes — to improve accuracy and trustworthiness.

Privacy-preserving personalization

Expect improvements in how models personalize while preserving privacy: on-device models, federated learning, or encrypted memory features that let users benefit from personalization without exposing raw data.

Continued ethical oversight

Policymakers, researchers, and industry will keep pushing for clearer rules, transparency, and accountability frameworks. These will influence how models are trained, tested, and released.

Long-term research directions

Research will continue toward models that reason more reliably, learn from smaller datasets, and integrate multimodal understanding more deeply — moving incrementally toward systems that can plan, verify, and collaborate across tasks.

12. Conclusion: GPT-5 as a Milestone in AI History

GPT-5 represents a pragmatic leap in language AI: more capable, more adaptable, and more useful across real-world tasks. It is not a magical replacement for human expertise, but rather a powerful tool that can multiply human creativity and productivity when used responsibly.

Readers and organizations should approach GPT-5 with curiosity, rigor, and attention to safety — focusing on integrating the technology in ways that amplify human potential while minimizing harm.